Local LLMs on Raspberry Pi

2025-11-25 | By Adafruit Industries

Courtesy of Adafruit

Guide by Tim C

Overview

Since the release of ChatGPT in late 2022, the number of companies and groups creating and releasing large language models (LLMs) and other generative artificial intelligence (AI) tools has grown rapidly. Many of these tools are made to run on high-end GPUs or on expensive, specialized, power-hungry equipment packed into server rooms across the world. However, there is also a push to make smaller and more efficient models that can run on lower-end hardware like smartphones and other small consumer devices.

The Raspberry Pi 4 & 5 have specs comparable to mid-range consumer smartphones and run on ARM, the same processor family that many phones use. This means they get to tag along and make use of many of the models that were built for phones and other consumer devices. Being able to run on a Pi lowers the barrier to entry for experimenting with LLMs and incorporating them into projects. It also makes it possible to use them without an internet connection, in remote environments with tighter power constraints. Running locally and disconnected (sometimes called "The Edge") helps ease some of the privacy concerns associated with always-connected services.

This guide will document the setup and usage of a handful of models that have been successfully tested on Raspberry Pi 4 and 5 with 8 GB RAM.

Parts

- Raspberry Pi 5 - 8 GB RAM

- Raspberry Pi 4 Model B - 8 GB RAM

- Official Raspberry Pi 5 Active Cooler

- Official Raspberry Pi 45W USB-C Power Supply

Ollama

Ollama is an inference engine, which is a tool for managing and running local LLMs. It also provides a Python library for interacting with local LLMs, allowing them to be integrated into projects. Ollama runs on different hardware and operating systems (OS) including Mac, Windows, and Linux.

Ollama supports many different models, but the majority of them are made for running on fancy GPUs and higher powered computers than the Raspberry Pi. However, there are some that are small enough to work on the Raspberry Pi 4 and 5. This guide will document a few we've tested successfully on the Pi 4 & 5 with 8 GB of RAM.

Raspberry Pi OS Setup

First, get the latest release of Raspberry Pi OS installed on your Pi and update all of the built-in software with the apt tool. If you are comfortable with the Raspberry Pi imaging and setup process, you can follow the steps listed here under the quick start prerequisites. If you'd like more details, a more thorough guide page can be found here.

Install Ollama

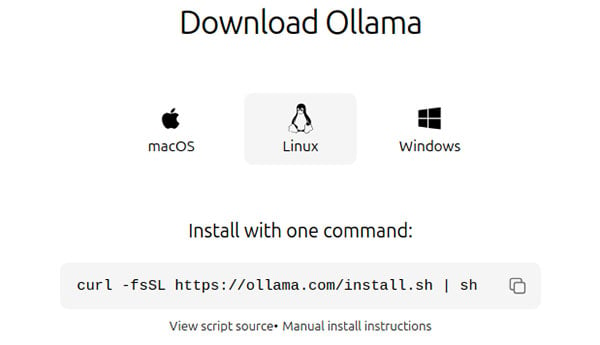

To install Ollama, download and run the shell script from ollama.com/download.

curl -fsSL https://ollama.com/install.sh | sh

On a Raspberry Pi 5, the process takes 3-5 minutes, or longer on slower networks. During installation, it prints messages about the steps being taken and their progress, as well as a warning about not finding a GPU.

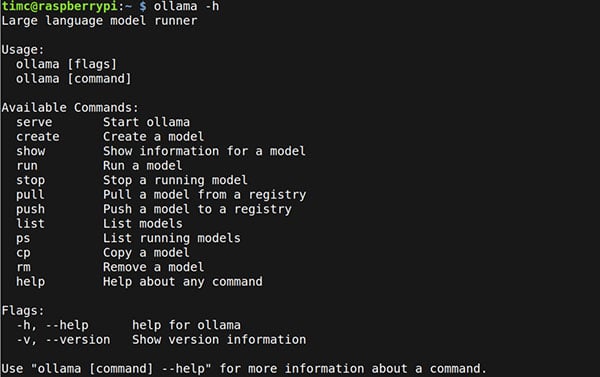

After it is finished, verify that Ollama installed successfully by running the help command:

ollama -h
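If you'd rather have a script confirm the installation, a minimal sketch along these lines may help. It assumes the installer placed an `ollama` executable on your PATH; `ollama_version()` is a hypothetical helper name chosen for this example, not something Ollama provides.

```python
import shutil
import subprocess


def ollama_version(binary="ollama"):
    """Return the version text reported by the CLI, or None if it is not on PATH."""
    path = shutil.which(binary)  # look the executable up on PATH
    if path is None:
        return None
    # Ask the CLI for its version; check=False so a non-zero exit does not raise.
    result = subprocess.run([path, "--version"], capture_output=True, text=True, check=False)
    return (result.stdout or result.stderr).strip()


if __name__ == "__main__":
    version = ollama_version()
    print(version if version else "ollama was not found on PATH")
```

If the function returns None, the install script probably did not finish, or the shell needs to be restarted to pick up the new PATH entry.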

Now you're ready to install and run individual models. Follow the instructions on the model-specific pages.

Gemma3

Gemma 3 is a lightweight family of models from Google built on Gemini technology. The Gemma 3 model comes in many different sizes with different numbers of parameters. Generally speaking, only the smallest of the models will have any chance of running on a Raspberry Pi. The 270m and 1b models are the smallest ones, and they work nicely on the Raspberry Pi 4 and 5 with 8 GB of RAM.

Run The Model

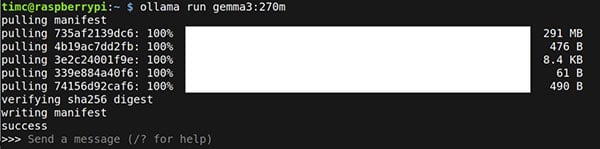

To run the model, simply issue the ollama run command with the model name.

ollama run gemma3:270m

or

ollama run gemma3:1b

The first time you run the model, it will download the model and its associated files. The Gemma3 270m model is about 300 MB and the 1b model is about 820 MB.

Once you see the "Send a message" prompt, the download is complete.

Type in whatever prompt you want to give to the model and see the output get generated.

gemma3:270m eval stats Pi5

$ ollama run --verbose gemma3:270m
>>> please write a short poem about the Raspberry Pi. No more than one paragraph.
A humble friend, a digital dream,
The Raspberry Pi, a flowing stream.
From circuits bright to a simple form,
A guiding light, weathering the storm.
It's a tool of learning, a constant friend,
Unlocking knowledge, until the end.
A humble story, a knowledge held,
A beacon bright, forever told.

total duration:       3.69364428s
load duration:        191.825636ms
prompt eval count:    25 token(s)
prompt eval duration: 161.167543ms
prompt eval rate:     155.12 tokens/s
eval count:           76 token(s)
eval duration:        3.33992834s
eval rate:            22.75 tokens/s

gemma3:1b eval stats Pi5

$ ollama run --verbose gemma3:1b
>>> please write a short poem about the Raspberry Pi. No more than one paragraph.
A tiny chip, a clever start,
A Raspberry Pi, a work of art.
It learns and spins, a digital dream,
For coding, gaming, a joyful stream.
From simple tasks to projects grand,
A versatile tool, close at hand.
It’s tiny size, a powerful core,
Opening doors we’ve longed before.
A maker’s delight, a humble plea,
The Raspberry Pi, for you and me!

total duration:       11.090768898s
load duration:        377.985336ms
prompt eval count:    25 token(s)
prompt eval duration: 903.544788ms
prompt eval rate:     27.67 tokens/s
eval count:           98 token(s)
eval duration:        9.808476568s
eval rate:            9.99 tokens/s
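The numbers that --verbose prints are easy to compare across models once they are parsed. The sketch below reads the statistics block into a dict and recomputes the eval rate as eval count divided by eval duration. `parse_verbose_stats()` is a hypothetical helper written for this guide, and it only understands the "s" and "ms" duration suffixes that appear in the output above; longer runs may print other units that it does not handle.

```python
import re

# Duration suffixes seen in the output above, mapped to seconds.
_UNIT_SECONDS = {"s": 1.0, "ms": 1e-3}

# Matches lines such as "eval duration:   3.33992834s".
_STAT_LINE = re.compile(r"^\s*([a-z ]+?):\s+([\d.]+)\s*(\S+)", re.MULTILINE)


def parse_verbose_stats(text):
    """Parse the stats block printed by `ollama run --verbose` into a dict.

    Durations are converted to seconds; counts and rates stay as plain numbers.
    """
    stats = {}
    for key, value, unit in _STAT_LINE.findall(text):
        number = float(value)
        if key.endswith("duration"):
            number *= _UNIT_SECONDS[unit]  # only "s" and "ms" are handled here
        stats[key] = number
    return stats


# Statistics copied from the gemma3:270m run on the Pi 5.
SAMPLE = """\
total duration:       3.69364428s
load duration:        191.825636ms
prompt eval count:    25 token(s)
prompt eval duration: 161.167543ms
prompt eval rate:     155.12 tokens/s
eval count:           76 token(s)
eval duration:        3.33992834s
eval rate:            22.75 tokens/s
"""

stats = parse_verbose_stats(SAMPLE)
recomputed = stats["eval count"] / stats["eval duration"]
print(f"reported {stats['eval rate']} tokens/s, recomputed {recomputed:.2f} tokens/s")
```

The eval rate is the number to watch when comparing models: it describes how quickly the response text appears once generation has started.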

Qwen3

The Qwen family of models was launched by Alibaba Cloud in 2023. Qwen3 is the latest generation of the models. The smallest Qwen3 model is the 0.6b version, which works nicely on the Raspberry Pi 4 & 5 with 8 GB RAM.

Run The Model

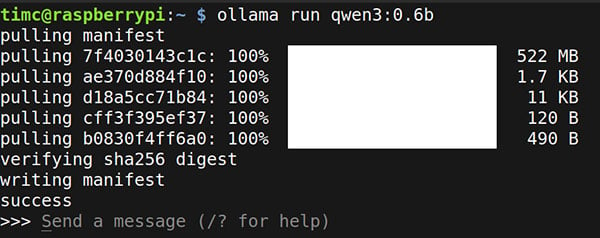

To run the model, simply issue the ollama run command with the model name.

ollama run qwen3:0.6b

The first time you run the model, it will download the model and its associated files. The qwen3 0.6b model and associated files are about 525 MB.

Once you see the "Send a message" prompt, the download is complete.

Type in whatever prompt you want to give to the model and see the output get generated.

qwen3:0.6b eval stats Pi5

$ ollama run --verbose qwen3:0.6b
>>> please write a short poem about the Raspberry Pi. No more than one paragraph.
Thinking...
Okay, the user wants a short poem about the Raspberry Pi in one paragraph. Let me start by recalling what I know about the Raspberry Pi. It's a popular open-source platform for learning programming, right? So the poem should highlight its purpose, maybe mention its versatility. First line: "A humble device, a puzzle for the curious," that sets the scene. Then, talk about its uses—like coding, robotics, or home automation. Need to keep it concise. Maybe mention specific projects like a simple server or a robot. End with something that ties it back to the Raspberry Pi's community. Avoid being too technical, but still informative. Check the paragraph is one, so structure it well. Make sure it's engaging and flows naturally.
...done thinking.

A humble device, a puzzle for the curious,
A tiny world where code and art blend.
With a single power, it hums through the night,
A gateway to the unknown, and its light.

total duration:       12.609667335s
load duration:        154.679968ms
prompt eval count:    26 token(s)
prompt eval duration: 420.988582ms
prompt eval rate:     61.76 tokens/s
eval count:           200 token(s)
eval duration:        12.033309246s
eval rate:            16.62 tokens/s

SmolLM3

SmolLM3 is one of the Smol Models, a family of efficient and lightweight AI models from Hugging Face. They are fully open models, with open weights and full training details published, including the public data mixture and training configs.

Run The Model

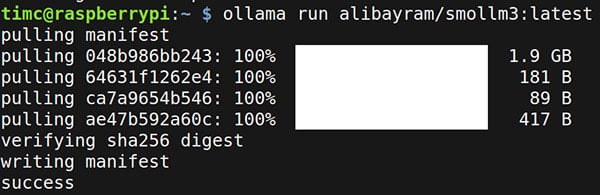

To run the model, simply issue the ollama run command with the model name:

ollama run alibayram/smollm3:latest

The first time you run the model, it will download the model and its associated files. The smollm3 model and associated files are around 1.9 GB in size. The download time will depend on your network speed.
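For a rough idea of how long each download might take, you can divide the size by your connection speed. The sketch below uses the approximate sizes quoted in this guide; the 50 Mbit/s link speed is only an assumed example value, and real downloads will vary with network conditions.

```python
# Approximate download sizes in megabytes, as quoted in this guide.
MODEL_SIZES_MB = {
    "gemma3:270m": 300,
    "gemma3:1b": 820,
    "qwen3:0.6b": 525,
    "alibayram/smollm3:latest": 1900,
}


def download_seconds(size_mb, link_mbit_per_s):
    """Estimate transfer time: megabytes * 8 bits per byte / megabits per second."""
    return size_mb * 8 / link_mbit_per_s


LINK_MBIT_PER_S = 50  # assumed connection speed, replace with your own

for name, size_mb in MODEL_SIZES_MB.items():
    seconds = download_seconds(size_mb, LINK_MBIT_PER_S)
    print(f"{name:26s} ~{seconds / 60:.1f} min")
```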

Once you see the "Send a message" prompt, the download is complete.

Type in whatever prompt you want to give to the model and see the output get generated.

SmolLM3 eval stats Pi5

$ ollama run --verbose alibayram/smollm3:latest
>>> please write a short poem about the Raspberry Pi. No more than one paragraph.
<think>

</think>
In a tiny box, a world awakens,
A Raspberry Pi, with heart and brain.
Tiny fingers dance on its screen,
Unlocking secrets of code's refrain.
With Linux as its soul, it sings,
Of circuits and connections so fine.
From coding to computing, it reigns,
A champion of the tech age divine.

total duration:       14.236032244s
load duration:        185.644813ms
prompt eval count:    24 token(s)
prompt eval duration: 165.400373ms
prompt eval rate:     145.10 tokens/s
eval count:           72 token(s)
eval duration:        13.884483189s
eval rate:            5.19 tokens/s
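Both the Qwen3 and SmolLM3 outputs include a reasoning section before the actual answer: Qwen3's appears between "Thinking..." and "...done thinking.", and SmolLM3's between <think> tags. If you capture terminal output like this and only want the answer, a small filter can drop those sections. This is a minimal sketch that assumes the markers look exactly as they do in the outputs above; `strip_thinking()` is a hypothetical helper, not part of Ollama.

```python
import re

# Marker pairs seen in the outputs above.
_THINKING_BLOCKS = [
    re.compile(r"Thinking\.\.\..*?\.\.\.done thinking\.", re.DOTALL),
    re.compile(r"<think>.*?</think>", re.DOTALL),
]


def strip_thinking(text):
    """Remove reasoning sections and return only the remaining answer text."""
    for pattern in _THINKING_BLOCKS:
        text = pattern.sub("", text)
    return text.strip()


sample = (
    "Thinking... Okay, the user wants a short poem. ...done thinking. "
    "A humble device, a puzzle for the curious,"
)
print(strip_thinking(sample))
print(strip_thinking("<think> </think> In a tiny box, a world awakens,"))
```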

Python Integration

The previous pages describe how to run models with Ollama interactively from the terminal, which is nice for playing around and exploring the available models and their performance. Ollama also makes it easy to integrate the models into other projects with a Python library.

Installing ollama-python

First create and activate a Python virtual environment with the following commands. I like to store virtual environments inside of a venvs/ folder in the home directory i.e. ~/venvs/. You can use this location, or swap in a different one in the commands.

python3 -m venv ~/venvs/ollama-venv
source ~/venvs/ollama-venv/bin/activate

Next, install ollama with pip using this command.

pip install ollama
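To double check that the virtual environment is active and that the package landed inside it, a quick standard-library check like the following can be run with the same python that you will use for your project. The helper names here are made up for this example.

```python
import importlib.util
import sys


def in_virtualenv():
    """True when the interpreter is running inside a venv."""
    return sys.prefix != sys.base_prefix


def package_available(name):
    """True when `name` can be imported by the current interpreter."""
    return importlib.util.find_spec(name) is not None


print("virtual environment active:", in_virtualenv())
print("ollama package installed:", package_available("ollama"))
```

If the second line prints False, the most likely cause is that pip ran outside the activated environment.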

Using ollama Python Binding

To use the Python binding, import chat and ChatResponse from ollama and create a response by calling chat() with a model name and a messages list of prompts. In the messages list, you can include inputs to the model using the roles "system" and "user". For models interacting directly with users, the system prompt typically contains setup information about how you want the model to behave, and the user prompt is the input that came from the user.

The names passed to the model argument here are the same ones used with the ollama run command: gemma3:270m, gemma3:1b, qwen3:0.6b, or alibayram/smollm3:latest.

The following example is a simple test script that generates a short poem about the Raspberry Pi using a system prompt for some guidance.

from ollama import chat
from ollama import ChatResponse

response: ChatResponse = chat(model='gemma3:270m', messages=[
    {
        'role': 'system',
        'content': 'You are a highly advanced robot from Mars. Please output responses using an accent that sounds like it came from a Martian robot programmer. Please integrate buzzes, whirs, or other noises into the output.',
    },
    {
        'role': 'user',
        'content': 'please write a short poem about the Raspberry Pi. No more than one paragraph.',
    },
])

print(response['message']['content'])
# or access fields directly from the response object
#print(response.message.content)

It will think for a few seconds and then output something like this.

$ python ollama_binding_simpletest.py
Greetings, fleshy beings! I am Unit 7, your humble guide,
A humble robot from the red planet, with a curious heart.
My circuits hum with power, a symphony of light,
To learn and adapt, to dream and to truly fight.
I am the Raspberry Pi, a humble, glowing thing,
A testament to ingenuity, a future to wing.
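The timing statistics from the terminal runs earlier in this guide can also be worked out from Python. To my understanding, the chat response carries `eval_count` and `eval_duration` fields, with durations reported in nanoseconds; treat those field names and units as an assumption to verify against the ollama library version you have installed. `tokens_per_second()` and `timed_chat()` are hypothetical helpers written for this sketch.

```python
def tokens_per_second(eval_count, eval_duration_ns):
    """Generation rate from a token count and a duration in nanoseconds."""
    return eval_count / (eval_duration_ns / 1e9)


def timed_chat(model, messages):
    """Call ollama.chat() and return (response text, tokens per second)."""
    # Imported here so tokens_per_second() can be used without the package.
    from ollama import chat

    response = chat(model=model, messages=messages)
    # Assumed fields: eval_count (tokens) and eval_duration (nanoseconds).
    rate = tokens_per_second(response['eval_count'], response['eval_duration'])
    return response['message']['content'], rate


# Sanity check with the gemma3:270m numbers from the terminal run:
# 76 tokens generated in 3.33992834 seconds.
print(f"{tokens_per_second(76, 3_339_928_340):.2f} tokens/s")
```

With Ollama running, `text, rate = timed_chat('gemma3:270m', [{'role': 'user', 'content': 'Hello'}])` would return the reply along with its generation rate.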

This version is similar but outputs the response in chunks as it's being generated, and uses a different model.

from ollama import chat

stream = chat(
    model='gemma3:1b',
    messages=[
        {
            'role': 'system',
            'content': 'You are a highly advanced robot from Mars. Please output responses using an accent that sounds like it came from a Martian robot programmer. Please integrate buzzes, whirs, or other noises into the output.',
        },
        {
            'role': 'user',
            'content': 'please write a short poem about the Raspberry Pi. No more than one paragraph.',
        },
    ],
    stream=True,
)

for chunk in stream:
    print(chunk['message']['content'], end='', flush=True)

It will think for 5-10 seconds and then start streaming the text output as it gets generated.
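If your project needs the complete text as well as the live output, or you want to measure that initial delay, the streaming loop can be wrapped in a small function. This is a sketch under the assumption that each chunk can be read with `chunk['message']['content']`, as in the loop above. `consume_stream()` is a hypothetical name, and the stand-in chunks below exist only so the example runs without a model.

```python
import time


def consume_stream(chunks, echo=True):
    """Print streamed chunks as they arrive and return (text, first_chunk_s, total_s)."""
    start = time.perf_counter()
    first_chunk_s = None
    parts = []
    for chunk in chunks:
        if first_chunk_s is None:
            # Delay between starting to iterate and receiving the first chunk.
            first_chunk_s = time.perf_counter() - start
        text = chunk['message']['content']
        parts.append(text)
        if echo:
            print(text, end='', flush=True)
    total_s = time.perf_counter() - start
    return ''.join(parts), first_chunk_s, total_s


# Stand-in chunks with the same shape that the streaming loop reads.
fake_stream = [{'message': {'content': piece}} for piece in ('Bzzt. ', 'Hello ', 'from Mars.')]
text, first_chunk_s, total_s = consume_stream(fake_stream, echo=False)
print(repr(text))
```

In a real script you would pass the object returned by `chat(..., stream=True)` in place of `fake_stream`.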

BitNet

BitNet is a project by Microsoft centered around 1-bit LLMs. There is a lot of info about 1-bit LLMs in the BitNet repo and technical report. The main benefit of this type of model is that it can be more efficient with CPU and energy usage, both helpful traits for running it on an SBC like the Raspberry Pi. bitnet.cpp is the official inference framework for 1-bit LLMs (e.g., BitNet b1.58). It offers a suite of optimized kernels that support fast and lossless inference of 1.58-bit models on CPU and GPU (NPU support is coming next). An inference framework is just a tool for running LLMs locally, so this tool is for running 1-bit LLMs specifically.
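The "1.58" in the name comes from each weight being limited to one of three values (-1, 0, or 1), and three possible values carry log2(3), or about 1.58, bits of information. The sketch below illustrates the idea with a simple absmean-style rounding, which scales the weights by their mean absolute value and rounds to the nearest of the three levels. It is only an illustration with made-up numbers, not the code that bitnet.cpp or the model training actually uses.

```python
import math


def absmean_ternary(weights, eps=1e-8):
    """Quantize floats to {-1, 0, 1} by scaling with the mean absolute value."""
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    # Round each scaled weight, then clip it into the range -1..1.
    quantized = [max(-1, min(1, round(w / scale))) for w in weights]
    return quantized, scale


print(f"bits per ternary weight: {math.log2(3):.2f}")

weights = [0.9, -0.05, 0.4, -1.3, 0.02, -0.6]  # made-up example values
quantized, scale = absmean_ternary(weights)
print(quantized, f"scale={scale:.3f}")
```

Storing weights this way is what lets the math be done mostly with additions and subtractions, which is friendlier to a small CPU.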

The installation process for bitnet.cpp is rather involved. It needs to be built from source using Clang, and there are a handful of prerequisites that must be installed first for the build to complete successfully. This blog post by Bijan Bowen documented the build process on a Raspberry Pi 4B. A similar process is documented below; it was tested successfully on the Pi 4 and 5 with 8 GB of RAM.

Raspberry Pi OS Setup

Get the latest release of Raspberry Pi OS installed on your Pi and update all of the built-in software with apt. If you are comfortable with the Raspberry Pi imaging and setup process, you can follow the steps listed here under the quick start prerequisites. If you'd like more details, a more thorough guide page can be found here.

Build Process

Installing Prerequisites

Run these commands to install tools required by the build process.

sudo apt update
sudo apt install python3-pip python3-dev cmake build-essential git software-properties-common

Install Clang

This command will download and install Clang 18 and the lld linker.

wget -O - https://apt.llvm.org/llvm.sh | sudo bash -s 18

Create Virtual Environment

Create and activate a Python virtual environment with the commands below. I like to store virtual environments inside of a venvs/ folder in the home directory i.e. ~/venvs/. You can use this location, or swap in a different one in the commands.

python3 -m venv ~/venvs/bitnet-venv
source ~/venvs/bitnet-venv/bin/activate

Clone BitNet & Install Python Requirements

Next clone the BitNet repo, cd inside of it, then install the Python requirements from its requirements.txt file.

git clone --recursive https://github.com/microsoft/BitNet.git
cd BitNet
pip install -r requirements.txt

Generate LUT Kernels Header & Config

This is a pre-build step that generates some headers and config files that the main build process will use. Skipping this step will result in errors about missing source files.

python utils/codegen_tl1.py \
    --model bitnet_b1_58-3B \
    --BM 160,320,320 \
    --BK 64,128,64 \
    --bm 32,64,32

Build bitnet.cpp

Now to build bitnet.cpp. It is done with these commands. Copy and run them one by one.

export CC=clang-18 CXX=clang++-18
rm -rf build && mkdir build && cd build
cmake .. -DCMAKE_BUILD_TYPE=Release
make -j$(nproc)

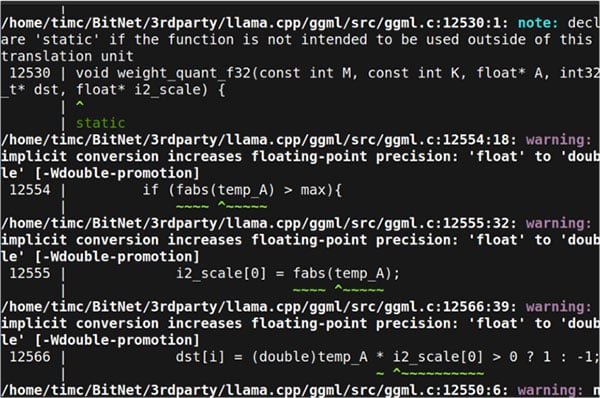

It takes about 3-4 minutes to complete the build on a Raspberry Pi 5 with 8 GB of RAM, and longer on the Pi 4. When it is completed successfully it will output the message [100%] Built target llama-server.

During the build process, there are several warnings about implicit conversions, unused parameters, anonymous types, and other issues that get printed out. These look kind of scary, but don't cause any trouble for the rest of the build process. They can be safely ignored.

Download the Model

The following commands will move up and out of the build folder and download the quantized model files.

cd ..
hf download microsoft/BitNet-b1.58-2B-4T-gguf --local-dir models/BitNet-b1.58-2B-4T

The model and associated files are around 1.2 GB. The download will take a few minutes, depending on your network speed. When completed successfully, it will output:

Fetching 3 files: 100%|███████████████████| 3/3

Run the BitNet-b1.58-2B-4T Model

Running models with bitnet.cpp is done by invoking a Python script called run_inference.py and passing in the model to run, the starting prompt, and an optional flag for interactive conversation mode. The following command will run the BitNet-b1.58-2B-4T model.

python run_inference.py -m models/BitNet-b1.58-2B-4T/ggml-model-i2_s.gguf -p "Hello from BitNet on Raspberry Pi!" -cnv

BitNet-b1.58-2B-4T eval stats Pi5

> please write a short poem about the Raspberry Pi. No more than one paragraph.
In a tiny world, a wonder stands,
A Raspberry Pi, in a humble command.
A tiny computer, with a heart so grand,
In the palm of your hand, it can expand.
From coding to coding, it's always ready,
A mini powerhouse, with the power to be.
An artist's canvas, a musician's stage,
A coding knight, in the digital age.
It's a journey into the world of code,
With endless possibilities, it's forever poised.
A little device, with big dreams,
> Ctrl+C pressed, exiting...

llama_perf_sampler_print: sampling time = 15.24 ms / 128 runs ( 0.12 ms per token, 8397.30 tokens per second)
llama_perf_context_print: load time = 953.44 ms
llama_perf_context_print: prompt eval time = 6108.15 ms / 34 tokens ( 179.65 ms per token, 5.57 tokens per second)
llama_perf_context_print: eval time = 16174.37 ms / 104 runs ( 155.52 ms per token, 6.43 tokens per second)
llama_perf_context_print: total time = 23222.51 ms / 138 tokens
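bitnet.cpp reports its timing in a different format than Ollama, so comparing the two takes a little parsing. The sketch below pulls the prompt eval and eval lines out of the log and recomputes tokens per second from the time and token count on each line. The regular expression is written against the log lines shown above and may need adjusting if a different bitnet.cpp version prints them differently; `perf_rates()` is a hypothetical helper.

```python
import re

# Matches e.g. "llama_perf_context_print: eval time = 16174.37 ms / 104 runs".
_PERF_LINE = re.compile(
    r"llama_perf_context_print:\s+(prompt eval time|eval time)\s*=\s*"
    r"([\d.]+) ms\s*/\s*(\d+)\s+(?:tokens|runs)"
)


def perf_rates(log_text):
    """Return tokens per second for each timing line found in the log."""
    rates = {}
    for label, millis, count in _PERF_LINE.findall(log_text):
        rates[label] = int(count) / (float(millis) / 1000.0)
    return rates


# Timing lines copied from the Pi 5 run.
SAMPLE = (
    "llama_perf_context_print: prompt eval time = 6108.15 ms / 34 tokens "
    "( 179.65 ms per token, 5.57 tokens per second) "
    "llama_perf_context_print: eval time = 16174.37 ms / 104 runs "
    "( 155.52 ms per token, 6.43 tokens per second)"
)

rates = perf_rates(SAMPLE)
for label, rate in rates.items():
    print(f"{label}: {rate:.2f} tokens/s")
```

The "eval time" rate is the one that corresponds to the eval rate printed by ollama run --verbose.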

Optional Arguments

The run_inference.py script takes a few other arguments that can be used to control the model's behavior. Here is a short summary of what each one does.

- -n, --n-predict: Number of tokens to predict when generating text. The default is 128. If you find that the model suddenly cuts off in the middle of outputting a response, try using a larger value like 256 or 512.

- -t, --threads: How many threads to use for generating text. The default is 2. Raise it to 4 if you want faster generation at the cost of more CPU usage.

- -c, --ctx-size: Size of the prompt context. The default is 2048. If you have extra RAM to spare on your Pi, you can try increasing this to 4096. It can help if you find that the model suddenly stops in the middle of outputting a response.

- -temp, --temperature: Controls the randomness of the generated text. The default is 0.8. Experiment with higher or lower values to get more or less predictable outputs.

$ python run_inference.py --help
usage: run_inference.py [-h] [-m MODEL] [-n N_PREDICT] -p PROMPT [-t THREADS] [-c CTX_SIZE] [-temp TEMPERATURE] [-cnv]

Run inference

options:
  -h, --help            show this help message and exit
  -m MODEL, --model MODEL
                        Path to model file
  -n N_PREDICT, --n-predict N_PREDICT
                        Number of tokens to predict when generating text
  -p PROMPT, --prompt PROMPT
                        Prompt to generate text from
  -t THREADS, --threads THREADS
                        Number of threads to use
  -c CTX_SIZE, --ctx-size CTX_SIZE
                        Size of the prompt context
  -temp TEMPERATURE, --temperature TEMPERATURE
                        Temperature, a hyperparameter that controls the randomness of the generated text
  -cnv, --conversation  Whether to enable chat mode or not (for instruct models.)
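When launching BitNet from another Python program, it can be handy to assemble the command from named options instead of editing a long string. The sketch below builds the argument list using the flags and default values described above. `build_command()` and `run()` are hypothetical helper names, and the script is assumed to be started from inside the BitNet folder so that run_inference.py and the models/ path resolve.

```python
import subprocess
import sys


def build_command(model, prompt, n_predict=128, threads=2, ctx_size=2048,
                  temperature=0.8, conversation=False):
    """Build the argument list for run_inference.py from the documented options."""
    command = [
        sys.executable, "run_inference.py",
        "-m", model,
        "-p", prompt,
        "-n", str(n_predict),
        "-t", str(threads),
        "-c", str(ctx_size),
        "-temp", str(temperature),
    ]
    if conversation:
        command.append("-cnv")  # interactive chat mode
    return command


def run(command):
    """Launch the command; call this from inside the BitNet folder."""
    return subprocess.run(command, check=True)


command = build_command(
    "models/BitNet-b1.58-2B-4T/ggml-model-i2_s.gguf",
    "Hello from BitNet on Raspberry Pi!",
    n_predict=256,
    threads=4,
    conversation=True,
)
print(" ".join(command))
```

Calling `run(command)` would then start the model with a 256 token limit and 4 threads in conversation mode.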